In this retrospective we look back at our engagement with Corn protocol, covering some of the security vulnerabilities discovered and the key takeaways that helped the team build a fuzzing suite that is easier to maintain and upgrade.

Introduction to Corn

Corn is an L2 network that provides infrastructure for using Bitcoin as a collateral asset on Ethereum. By combining the low transaction cost of Ethereum with the security of the Bitcoin L1, it lets users put their Bitcoin capital to work in DeFi applications on Ethereum.

Corn is centered around Bitcorn (BTCN), an ERC20 token pegged to BTC. The primary contract under review in this engagement was the CornSilo, which allows users to deposit assets, earn points on them via Bitcorn, and then bridge them to the Corn network once the network is live.

Work Done

The Corn engagement lasted 2 months and included the following:

A dedicated fuzzing engineer, Lourens

40 hours of Invariant Bootstrapping implemented by Alex The Entreprenerd

This article recaps the first month, in which the team set up and ran an invariant tester for the core repository.

Fuzzing Takeaways

During the fuzzing portion of the engagement, the team adopted several approaches and development best practices that we would like to share with the community.

1. Generalize as much as possible

At the time of the review, the SwapFacility contract was still under development, so the fuzz testing scaffolding implemented by the Recon team had to be generic enough to work with different implementations of the contract logic. Switching to a different interface should require only small changes to the argument values passed in the target functions.

2. Separation of concerns

When developing a fuzzing suite, the Recon team sorts the functions targeted by the fuzzer into one of three categories: public/external user-facing functions, admin functions, and targets that test doomsday scenarios (events that should never occur, or should occur only under specific conditions).

This separation of concerns makes it easier to locate the tests for a given functionality and keeps the suite maintainable as more tests are added by various contributors.
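A minimal sketch of how such a three-way split might look. The `Vault` contract and every handler name below are hypothetical stand-ins chosen for illustration; they are not the Corn contracts or the actual Recon harness.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical system under test (NOT a Corn contract).
contract Vault {
    address public admin = msg.sender;
    bool public paused;
    uint256 public totalDeposits;
    mapping(address => uint256) public balanceOf;

    function deposit(uint256 amount) external {
        require(!paused, "paused");
        balanceOf[msg.sender] += amount;
        totalDeposits += amount;
    }

    function setPaused(bool value) external {
        require(msg.sender == admin, "not admin");
        paused = value;
    }
}

// Shared deployment used by every category of target.
abstract contract Setup {
    Vault internal vault;

    function setUp() internal virtual {
        vault = new Vault(); // the harness becomes the admin
    }
}

// 1. Public/external user-facing functions.
abstract contract UserTargets is Setup {
    function vault_deposit(uint256 amount) public {
        vault.deposit(amount);
    }
}

// 2. Admin functions.
abstract contract AdminTargets is Setup {
    function vault_setPaused(bool value) public {
        vault.setPaused(value);
    }
}

// 3. Doomsday scenarios: things that should never happen.
abstract contract DoomsdayTargets is Setup {
    function doomsday_depositNeverRevertsWhenUnpaused(uint256 amount) public {
        if (vault.paused()) return;
        amount = amount % 1e30; // keep sums far away from overflow
        try vault.deposit(amount) {} catch {
            assert(false); // an unpaused deposit reverted
        }
    }
}

// The contract handed to the fuzzer simply composes the three groups.
contract TargetFunctions is UserTargets, AdminTargets, DoomsdayTargets {
    constructor() {
        setUp();
    }
}
```

Keeping each category in its own contract means a contributor adding an admin handler only touches `AdminTargets`, while the fuzzer still sees a single entry point.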

3. Reduce runs when possible

For the SwapFacility contract specifically, the team decided to implement all invariant tests as assertion tests directly in the TargetFunctions contract. This departs from the standard Recon harness, where invariants are tested using a combination of assertions defined in a TargetFunctions contract and boolean properties defined in a Properties contract (for more on the standard Recon harness see this post).

In this case, all of the invariants under test could be expressed as assertions, and defining them that way simplified extended jobs: Medusa can run in assertion and property testing mode simultaneously, whereas Echidna can run in only one of the two modes at a time.

This let the team follow its preferred workflow of running the faster (but experimental) Medusa first to achieve coverage, then switching to Echidna for longer runs with a stronger guarantee of correctness, while only ever having to perform a run in a single testing mode.
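To make the distinction concrete, here is a small sketch of the same invariant written both ways. The `Counter` contract and the function names are hypothetical; the `echidna_` prefix is Echidna's default for property tests, and the prefix used in a real suite depends on its configuration.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical contract under test.
contract Counter {
    uint256 public total;
    uint256 public constant CAP = 1_000;

    function add(uint256 x) external {
        require(total + x <= CAP, "cap exceeded");
        total += x;
    }
}

contract Harness {
    Counter internal counter = new Counter();

    // Assertion style: the check lives inside the target function
    // and is evaluated whenever the fuzzer calls this handler.
    function counter_add(uint256 x) public {
        uint256 totalBefore = counter.total();
        counter.add(x); // a revert here is not an assertion failure
        assert(counter.total() == totalBefore + x);
        assert(counter.total() <= counter.CAP());
    }

    // Property style: a separate boolean function that the fuzzer
    // evaluates after calls when running in property mode.
    function echidna_total_never_exceeds_cap() public view returns (bool) {
        return counter.total() <= counter.CAP();
    }
}
```

If every invariant can be written in the first form, a single assertion-mode run covers the whole suite on either tool.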

4. Share setups when possible

In this engagement the goal was to create a testing suite for evaluating the primary system invariants locally, together with a forked testing suite for the invariants that depend on integrating contracts (primarily those related to dust and fees).

To keep the test suite interface as simple as possible while supporting both local and forked testing, the suite used a shared setUp function to deploy the system contracts. This was then extended with a _setUpFork function, which set the addresses of the deployed dependency contracts and, where necessary, replaced the locally deployed Corn system contracts with new deployments that had these dependencies passed in.

Because some parts of the system (SiloZap) had invariants that were only testable with fork testing, the following modifier was implemented that ensured its target functions would never be called in local testing:

This modifier checks that the SiloZap deployed locally in the shared setup is overwritten with the version deployed with forked values (only true when the forked setup is being used).
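The pattern described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the code from the engagement: the simplified `SiloZap`, its `router` dependency, the `onlyFork` name, and the placeholder address are all hypothetical.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical, heavily simplified stand-in for a contract that
// needs a live dependency to behave meaningfully.
contract SiloZap {
    address public immutable router;

    constructor(address router_) {
        router = router_;
    }

    function zapIn(uint256 amount) external view returns (uint256) {
        require(router != address(0), "no router");
        return amount;
    }
}

abstract contract Setup {
    SiloZap internal siloZap;      // instance the targets call
    SiloZap internal localSiloZap; // remembers the local deployment

    // Shared setup: deploys everything without external dependencies.
    function setUp() internal virtual {
        siloZap = new SiloZap(address(0));
        localSiloZap = siloZap;
    }

    // Fork extension: overwrite the local deployment with one that
    // has the forked dependency passed in.
    function _setUpFork(address liveRouter) internal {
        siloZap = new SiloZap(liveRouter);
    }

    // Skip the target unless the local SiloZap has been overwritten,
    // which only happens when the forked setup has run.
    modifier onlyFork() {
        if (address(siloZap) == address(localSiloZap)) return;
        _;
    }
}

abstract contract ZapTargets is Setup {
    function siloZap_zapIn(uint256 amount) public onlyFork {
        siloZap.zapIn(amount);
    }
}

contract LocalTester is ZapTargets {
    constructor() {
        setUp(); // siloZap_zapIn becomes a no-op
    }
}

contract ForkTester is ZapTargets {
    constructor() {
        setUp();
        _setUpFork(address(uint160(0xBEEF))); // placeholder dependency address
    }
}
```

Returning early rather than reverting keeps fork-only handlers from polluting local runs with spurious reverts.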

5. Optimistic accounting

In the deposit and withdraw target functions, the change in user balances would normally be measured before and after the call and stored in a tracking ghost variable.

In this case, however, the implementation logic reverts the function call if the amounts passed in are invalid, so any call that succeeds must have used the full amount. The team therefore implemented “optimistic accounting”, which simply adds the amounts passed in to an accumulator tracking variable.
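A minimal sketch of optimistic accounting, assuming a hypothetical `Silo` whose deposit and withdraw revert on invalid amounts. None of the names below come from the Corn codebase.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical contract under test: every invalid amount reverts.
contract Silo {
    mapping(address => uint256) public shares;
    uint256 public totalShares;

    function deposit(uint256 amount) external {
        require(amount > 0, "zero amount");
        shares[msg.sender] += amount;
        totalShares += amount;
    }

    function withdraw(uint256 amount) external {
        require(amount > 0 && amount <= shares[msg.sender], "invalid amount");
        shares[msg.sender] -= amount;
        totalShares -= amount;
    }
}

contract Harness {
    Silo internal silo = new Silo();

    // Accumulators updated "optimistically" from the inputs alone.
    uint256 internal ghostDeposited;
    uint256 internal ghostWithdrawn;

    function silo_deposit(uint256 amount) public {
        silo.deposit(amount);
        // Only reached if the call succeeded; a revert above unwinds
        // the whole handler, so the ghost never records a failed call.
        ghostDeposited += amount;
    }

    function silo_withdraw(uint256 amount) public {
        silo.withdraw(amount);
        ghostWithdrawn += amount;
    }

    // The accumulators must match what the contract reports.
    function silo_accounting_matches() public view {
        assert(silo.totalShares() == ghostDeposited - ghostWithdrawn);
    }
}
```

The shortcut is only sound because a successful call is guaranteed to have consumed exactly the amount passed in; for logic that can partially fill or silently cap amounts, measuring balance deltas remains necessary.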

6. Pranks > Mocks

When implementing fork testing, a common issue is how to give a testing actor enough of a given ERC20 token to execute function calls.

Minting logic varies from token to token, so directly calling mint on the token to fund a user is not as trivial as it may first appear, and it often requires creating a mock that correctly implements the real token’s interface. If the mock introduces any logic that behaves differently from the actual token, the fuzz testing suite may no longer emulate the full system functionality and could leave security bugs undiscovered.

In this engagement the team therefore chose to have a holder of a large amount of tokens (a whale) on the forked network transfer tokens to the testing actor. Because transferring and approving are defined by the ERC20 standard, this lets us test system functionality with greater certainty that it matches the actual system behavior (see this great tool by codeislight for doing just this).
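A rough sketch of funding an actor from a whale with a prank instead of a mock. It assumes the fuzzer exposes the Foundry-style `prank` cheatcode at the standard cheatcode address; the `WhaleFunding` contract and `_fundFromWhale` helper are hypothetical names, and the whale address must be looked up on the forked network.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Minimal ERC20 surface needed for funding.
interface IERC20 {
    function balanceOf(address account) external view returns (uint256);
    function transfer(address to, uint256 amount) external returns (bool);
}

// Minimal cheatcode surface: only `prank` is needed here.
interface IHevm {
    function prank(address msgSender) external;
}

abstract contract WhaleFunding {
    // Standard cheatcode address, derived the same way forge-std does.
    IHevm internal constant vm =
        IHevm(address(uint160(uint256(keccak256("hevm cheat code")))));

    // Moves `amount` of the real token from `whale` to `actor`.
    // Assumes the token returns a bool from `transfer`; tokens that
    // return nothing would need a low-level call instead.
    function _fundFromWhale(
        IERC20 token,
        address whale,
        address actor,
        uint256 amount
    ) internal {
        require(token.balanceOf(whale) >= amount, "whale balance too low");
        vm.prank(whale); // only the next call is sent as the whale
        require(token.transfer(actor, amount), "transfer failed");
    }
}
```

Because the real token contract executes the transfer, any fee-on-transfer, blacklist, or rebasing behavior is exercised exactly as it would be in production.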

Fuzzing Trophies

The following “trophies” (vulnerabilities/unexpected behaviors) were discovered in the fuzzing portion of the engagement.

Incorrect Fee Math

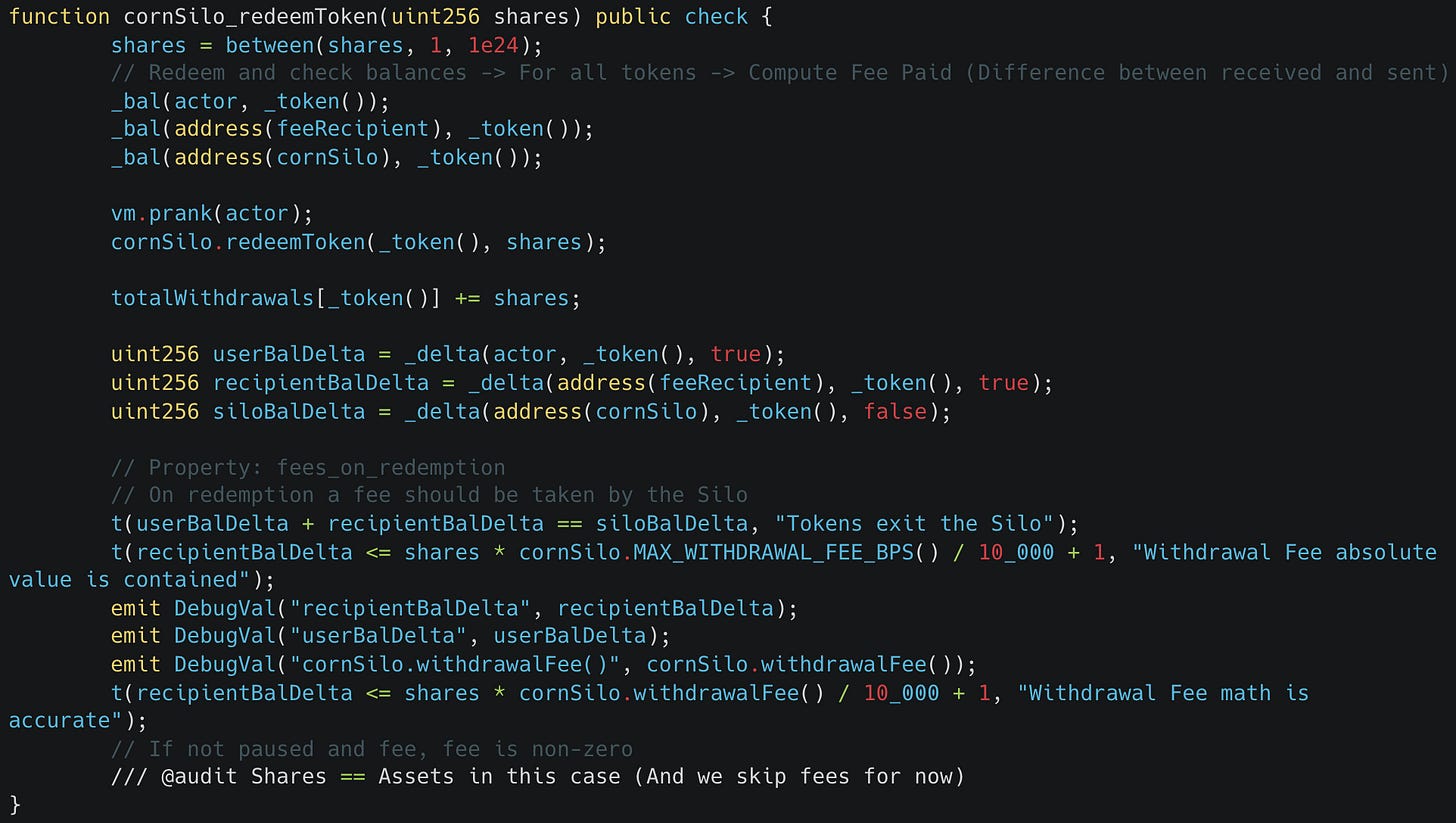

The following stateful fuzz tests identified an incorrect formula in the fee calculation: the fees actually received did not match the values expected by the ghost variables.

Logs

https://staging.getrecon.xyz/shares/9653d22a-e2e1-453c-8c67-b23912a578e3

Repro

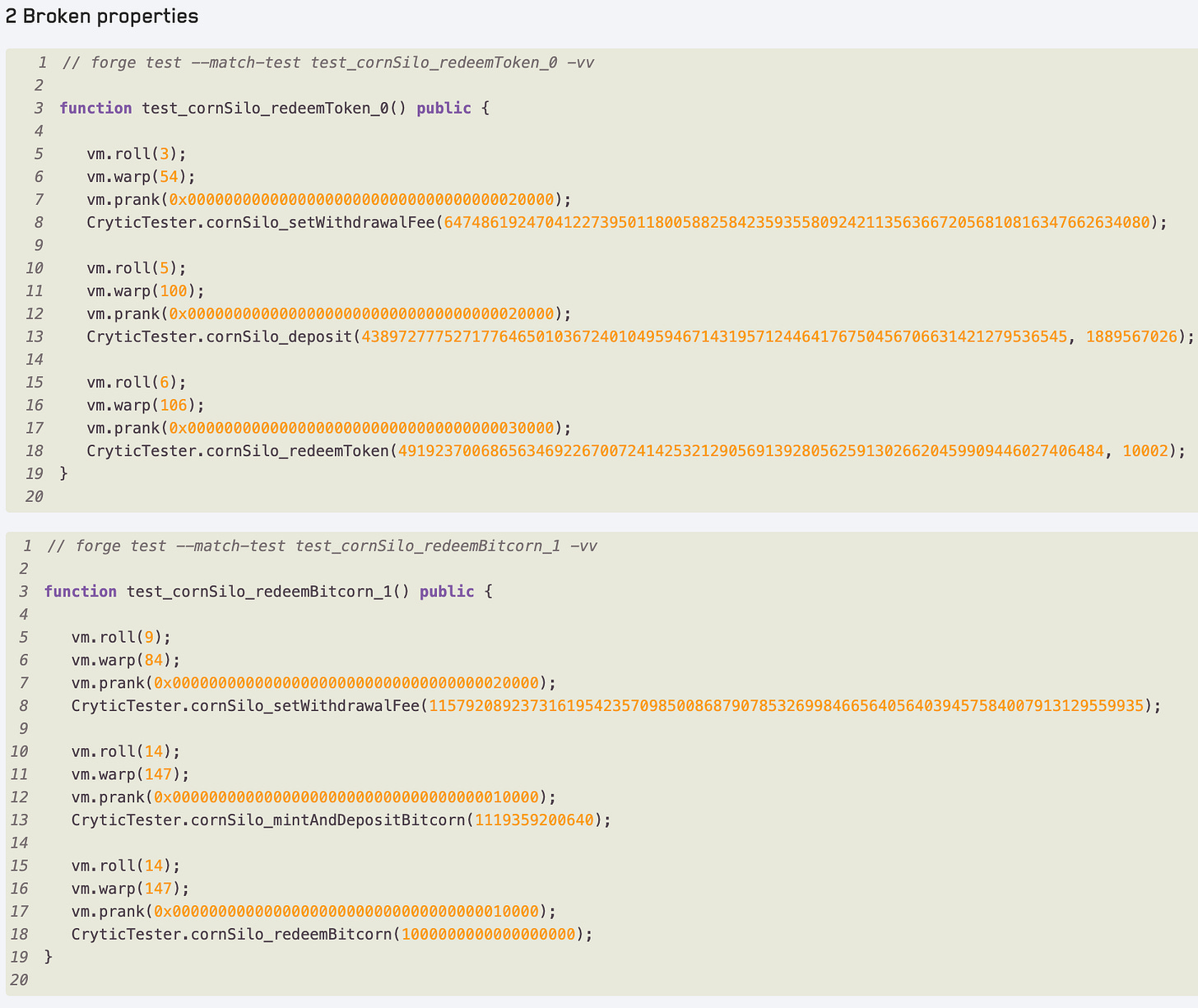

From the output of our Medusa run, we generated the following tests from the broken properties so they can be reproduced locally with Foundry for debugging:

Silo Insolvency

An insolvency bug identified in the manual review was remediated, and the following State Properties were implemented as a further check that the silo cannot become insolvent:

Conclusion

Manual reviews can be invaluable in identifying vulnerabilities in a system, but when the manual reviewers are also fuzzing specialists, they can uncover novel vulnerabilities and implement properties that confirm the remediations for issues found in the manual review do in fact hold. Additionally, properties implemented early in development document the expected system behaviors, so any later change that breaks them can be identified easily.

If you've made it this far, you value the security of your protocol.

At Recon we offer boutique audits powered by invariant testing. You get the best of both worlds: a security audit done by an elite researcher, paired with a cutting-edge invariant testing suite.

Sound interesting? Reach out to us here: